6.8300 Final Project: Taming CLIP’s Captioning Bias: A COCO-Driven Analysis and Permutation Ensemble


Abstract: Vision-language models like CLIP struggle with multi-object scenes, often favoring prominent objects or those mentioned first in captions. Using real-world COCO images, we show that CLIP’s caption-matching accuracy drops from 91.23% to 87.45% when object order is reversed. To address this, we explore a post-hoc mitigation: a permutation ensemble that averages caption embeddings across all object orders, improving robustness and recovering accuracy to 90.04%. Our findings reveal persistent order biases and offer a simple strategy to improve CLIP’s reliability in complex scenes.

Generated by GPT-4o. Prompt: Please create a widescreen cover image illustration for my blog post. Please illustrate a “humanized” CLIP model being unsure about which caption best describes the attached image. The captions are: “a pizza and a dog and a dining table”, “a dining table and a dog and a pizza”, “a pizza and a dog and a cell phone”. Please include the provided image along with three captions in the generated image!

Introduction

Accurate image captioning, a key challenge at the intersection of computer vision and natural language processing, is fundamental for enabling machines to “see” and describe visual content. This capability underpins diverse applications, from accessibility tools that interpret images for visually impaired users to content-based image retrieval and the scene understanding required by autonomous systems. Real-world photographs, the primary input for many computer vision tasks, typically depict complex scenes with multiple objects of varying sizes, categories, and semantic importance. These multi-object scenarios present a significant hurdle for vision-language models, which must identify, localize (implicitly or explicitly), and articulate all relevant entities in a coherent textual description. Datasets like Microsoft COCO (Lin et al., 2014) are crucial benchmarks because they exemplify this complexity with richly annotated, multi-object scenes. Understanding how models such as CLIP (Radford et al., 2021), which learns joint embeddings from visual and textual data, handle these scenes is therefore critical for improving the robustness, fairness, and overall performance of computer vision systems that aim to bridge the gap between pixels and semantics.

Literature Review

Biases in Multi-Object Vision–Language Models

Recent research has highlighted that vision–language models, especially CLIP (Radford et al., 2021), exhibit notable biases when processing images containing multiple objects. These biases manifest primarily through the models’ tendencies to prioritize larger, visually dominant objects and objects mentioned earlier in captions (Abbasi et al., 2025). Specifically, Abbasi et al. constructed controlled benchmarks (SimCO, CompCO) to analyze how varying object size and caption order affect CLIP’s image–text matching accuracy. Their results demonstrated that CLIP disproportionately focuses on larger objects visually, and textually prioritizes the first-mentioned object, significantly impacting multi-object captioning tasks.

Kamath et al. (2023) similarly explored CLIP’s limitations, revealing that its text encoder bottlenecks compositional information, causing failures in representing object relationships, attribute–object bindings, and counts. For instance, CLIP struggled to differentiate semantically nuanced phrases like “a red square and a blue circle” from “a blue square and a red circle,” indicating weak compositional grounding.

Further studies on quantity biases reinforce these findings. Zhang et al. (2024) found that CLIP embeddings inadequately capture numerical object counts, leading to downstream errors such as incorrect object numbers in image generation. These biases collectively suggest that CLIP’s current embedding strategies offer limited fidelity for multi-object representation and grounding, emphasizing dominant objects while neglecting detailed compositional relationships.

Evaluation Methods and Datasets

To systematically study these biases, researchers have developed specialized datasets and analytical methods. Abbasi et al.’s (2025) SimCO and CompCO datasets provide controlled synthetic and real-world image–caption pairs to precisely manipulate object prominence and mention order, making explicit the biases inherent in CLIP’s visual and textual encoders.

Other benchmarks like Winoground (Thrush et al., 2022) and CREPE (Yuksekgonul et al., 2023) specifically target relational and compositional understanding, exposing how small lexical or structural changes (e.g., swapping object positions in captions) significantly disrupt CLIP’s accuracy. Complementing these datasets, Chen et al. (2023) introduced gScoreCAM, a visualization technique that generates attention heatmaps highlighting CLIP’s focal regions within images. Through such visualizations, researchers confirmed that CLIP frequently fixates on the most visually prominent object, further validating previous analytical findings and providing intuitive diagnostics for compositional failures.

Mitigation Techniques

To address these compositional biases, researchers have proposed both architectural enhancements and novel training strategies. One notable approach is the integration of explicit object detection modules. For instance, MDETR (Kamath et al., 2021) and GLIP (Li et al., 2022) incorporate transformers trained to explicitly localize objects based on textual queries, thus promoting stronger grounding and compositional reasoning capabilities. These models substantially outperform traditional CLIP in tasks requiring precise multi-object correspondence.

Alternatively, Assouel et al. (2024) introduced OC-CLIP, an object-centric extension of CLIP designed specifically to enhance multi-object grounding. OC-CLIP utilizes slot-based object representations and graph-based scene parsing to achieve explicit bindings between image regions and caption components, significantly improving performance on compositional retrieval tasks.

Training-centric solutions have also been explored, including contrastive fine-tuning with carefully constructed hard negatives, and augmenting datasets with synthetic compositional examples. While effective in improving benchmark scores, these data-driven approaches address the symptoms of CLIP’s biases rather than the fundamental architectural limitations, underscoring the importance of combining architectural and data-centric solutions for robust multi-object vision–language modeling.

Our take

In the scope of a class term project, we’d like to probe the issues and ideate a potential post-hoc fix.

Dataset - Microsoft COCO

For our analysis, we rely on the Microsoft Common Objects in Context (COCO) dataset—a widely used benchmark in computer vision research. COCO offers richly annotated images that capture complex everyday scenes, typically containing multiple objects of diverse categories, scales, and spatial relationships. This makes it well-suited for studying how vision-language models handle multi-object representations.

Each image in COCO includes multiple human-written captions and object-level annotations, including precise bounding boxes, object categories, and segmentation masks.

The dataset provides structured annotation fields such as:

- image_id: Unique identifier for each image

- bbox: A bounding box in [x, y, width, height] format

- category_id: Object class index (mapped to names via the category list)

Defining Object Prominence

In our study, we define the prominence of an object in an image as the ratio between the area of the object’s annotated bounding box and the total area of the image. This simple yet effective geometric measure quantifies an object’s spatial dominance within the visual scene. Prominence serves as a proxy for visual salience, under the assumption that larger objects are more likely to be visually or semantically prioritized by both human observers and vision-language models.

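Formally, for an object with annotation [x, y, width, height] in the COCO dataset, and an image of dimensions image_width and image_height, prominence is calculated as:

\(\begin{aligned} \mathrm{prominence} \;=\;\frac{\mathrm{width}\times\mathrm{height}}{\mathrm{image\_width}\times\mathrm{image\_height}}. \end{aligned}\)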

Granted, an annotated bounding box area is not equivalent to the actual object’s size because not every object in an image appears in a perfect rectangle. While the COCO dataset does provide more granular segmentation annotations, we use the bounding box area as a simple proxy for the actual prominence of an object in a given image.

By quantifying prominence in this way, we can analyze whether models like CLIP are biased toward larger objects in multi-object scenes, especially when generating or scoring captions that describe such images.
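
As an illustration of the definition above, here is a minimal Python sketch of the prominence computation. The function name and the example numbers are ours and purely illustrative; only the [x, y, width, height] box layout comes from the COCO annotation format.

```python
def prominence(bbox, image_width, image_height):
    """Fraction of the image area covered by a COCO-style bounding box."""
    # COCO stores boxes as [x, y, width, height]; the position is not needed here.
    _, _, box_width, box_height = bbox
    return (box_width * box_height) / (image_width * image_height)


# Hypothetical annotation: a 320x240 box inside a 640x480 image.
print(prominence([10.0, 20.0, 320.0, 240.0], 640, 480))  # 0.25
```

Because overlapping boxes are each measured against the full image, the prominences of the objects in one image can sum to more than 1.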

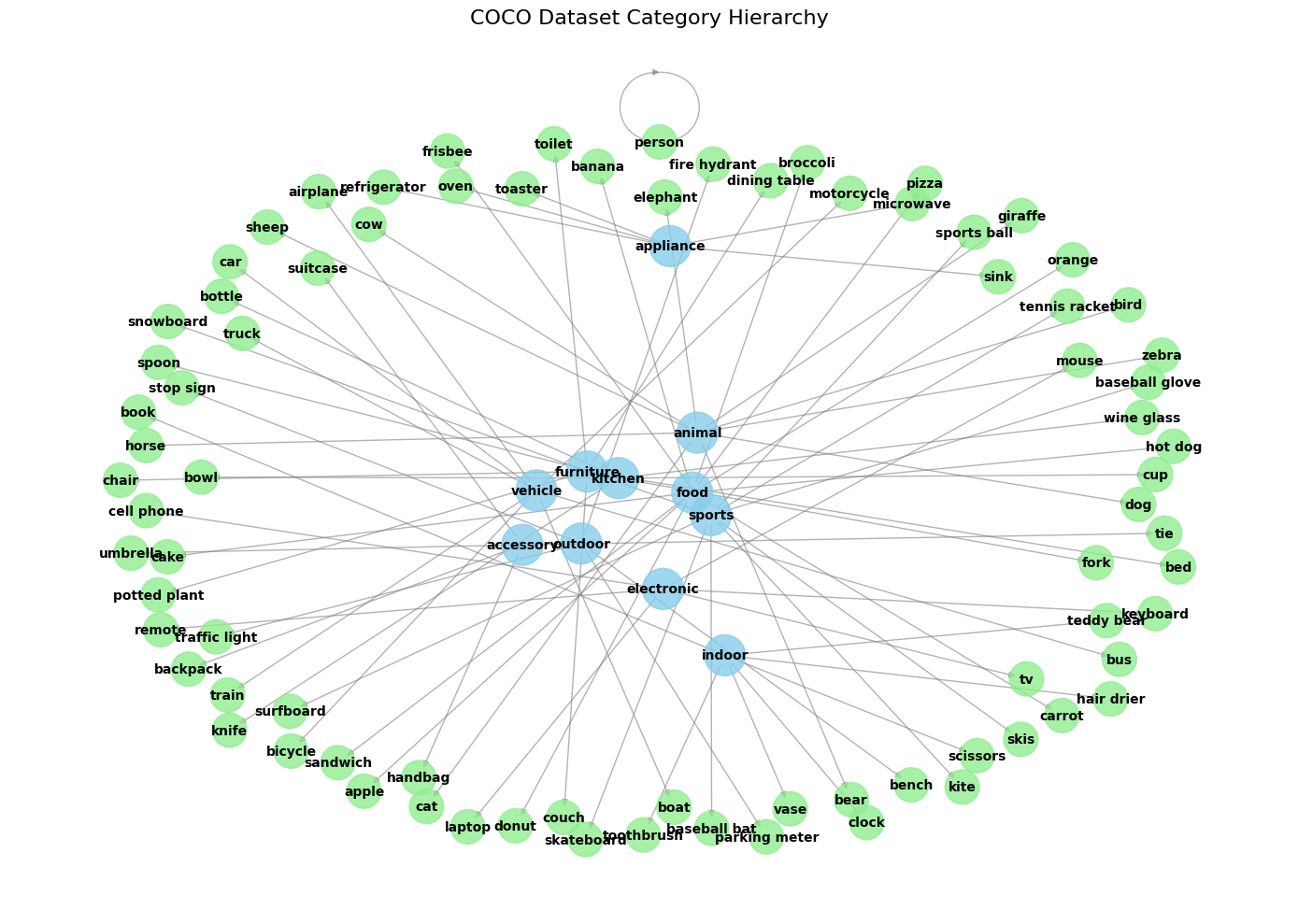

COCO Categories

There are 80 annotated categories in the COCO train2017 split. The diagram illustrates the hierarchical grouping of these object classes (shown in green) under broader super-categories (shown in blue), such as animal, vehicle, kitchen, and electronic. Each edge connects an object category to its corresponding super-category as defined by the COCO dataset’s metadata. This visualization highlights the diversity and complexity of the dataset.

Data Selection

In this project, we selected 502 images from the 2017 training set (train2017).

Although the COCO train2017 split contains 118K images, we had to select a subset based on the following criteria:

- Sufficient annotated objects per image: Our study focuses on multi-object scenarios, so images require a minimum number of objects. We required each image to have at least 3 annotated objects.

- Sufficient prominence per object: After a brief exploration of the COCO dataset, we noticed some annotated objects can be very small. This rule aims to exclude instances where, for example, a tiny corner of a microwave is annotated as “microwave.” We required each annotated object to have at least 10% prominence.

- Unique object categories per image: For example, constructing captions for images with multiple instances of the same object category (e.g., two “dogs”) would require disambiguation, which was outside the scope of this study. Therefore, we required all annotated objects in an image to belong to unique categories.

| Filter Criterion | Cumulative Images Remaining |
|---|---|
| Initial train2017 set | 118K |
| >= 3 objects per image | 81,982 |
| Each object >= 10% prominence | 1,012 |
| All objects in unique categories | 502 |
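
The three criteria can be sketched as a single per-image check. This is a rough sketch rather than our exact pipeline: it assumes each image has already been reduced to a list of (category name, prominence) pairs, and it reads the prominence rule as applying to every annotated object in the image. The function name `passes_filters` is hypothetical.

```python
def passes_filters(objects, min_objects=3, min_prominence=0.10):
    """Check one image against the three selection criteria.

    `objects` is a list of (category_name, prominence) pairs, one per
    annotated object in the image.
    """
    # Criterion 1: enough annotated objects.
    if len(objects) < min_objects:
        return False
    # Criterion 2: every annotated object is sufficiently prominent.
    if any(p < min_prominence for _, p in objects):
        return False
    # Criterion 3: no category appears more than once.
    names = [name for name, _ in objects]
    return len(names) == len(set(names))


print(passes_filters([("airplane", 0.25), ("truck", 0.22), ("person", 0.11)]))  # True
print(passes_filters([("dog", 0.30), ("dog", 0.20), ("couch", 0.40)]))          # False
```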

Captioning Method

For each of the 502 selected images, we employed the following captioning process:

- Identify the top 3 most prominent objects, e.g., ['airplane', 'truck', 'person']. The order of these objects was varied in subsequent experiments.

- Generate a text caption by prefixing each object name with the appropriate English indefinite article (“a” or “an”) and conjoining them with “and”, e.g., "an airplane and a truck and a person".

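The caption template can be sketched in a few lines of Python. The article rule below is a simple first-letter heuristic that we assume is sufficient for COCO category names; it would be wrong for words such as “hour” or “unicorn”. Both function names are illustrative.

```python
def indefinite_article(noun):
    """Pick "a" or "an" from the first letter (heuristic, see note above)."""
    return "an" if noun[0].lower() in "aeiou" else "a"


def make_caption(objects):
    """Join object names as "a X and a Y and a Z", in the given order."""
    return " and ".join(f"{indefinite_article(name)} {name}" for name in objects)


print(make_caption(["airplane", "truck", "person"]))
# an airplane and a truck and a person
```
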
Experiments

Inspired by the controlled multi-object bias study of Abbasi et al. (2025), we evaluated whether the same prominence and order biases emerge when testing CLIP on real-world images from the COCO train2017 split rather than on controlled benchmarks like SimCO and CompCO. Whereas Abbasi et al. precisely manipulated object size and mention order in crafted scenes, we selected 502 COCO images containing at least three objects (each occupying ≥10% of the frame) and generated paired captions that either vary the order of the top-3 objects or replace one of them. This real-data pipeline defines “prominence” as the ratio of bounding-box area to image area and introduces a “random third object” for incorrect caption variants, which allows us to probe CLIP’s biases in complex, natural settings using a simple accuracy-based metric.

Our results show a drop in matching accuracy from 91.23% (largest-first correct vs. random-third incorrect) to 87.45% when the correct caption is presented in smallest-first order, in line with the performance degradation that Abbasi et al. (2025) observed when swapping object mention order in their controlled benchmarks. Although the drop is slightly attenuated, possibly due to COCO’s richer visual context, this concordance suggests that CLIP’s order bias persists beyond controlled datasets and into natural, multi-object environments.

1. Correct vs. Incorrect Captions with Largest Object First

We studied whether a less prominent object being misrepresented in a caption could mislead CLIP when scoring captions for the same image.

For each image, we constructed two captions:

- A correct caption that mentions the top 3 most prominent objects in descending order of prominence (largest first).

- An incorrect caption that mentions the two most prominent objects correctly (in descending order of prominence) but replaces the third object with a randomly chosen category from the COCO dataset (ensuring it is distinct from the other two).

For example, image 150410 (shown above) has the following three most prominent objects:

| Object | Prominence |
|---|---|
| airplane | 0.252096262075527 |
| truck | 0.2164387212748829 |
| person | 0.10906725373243556 |

We produced the following two captions:

- Correct: “an airplane and a truck and a person”

- Incorrect: “an airplane and a truck and a chair”

With all 502 images captioned with a pair of correct and incorrect captions, we asked CLIP to score each image against its two captions.
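
For readers unfamiliar with how CLIP turns two captions into a pair of probabilities, the sketch below shows the usual recipe: cosine similarities between the image embedding and each caption embedding, multiplied by a temperature and passed through a softmax. The embeddings here are plain Python lists standing in for real CLIP outputs, and the scale of 100 is an assumption (roughly the learned value in released CLIP checkpoints), not a number taken from our runs.

```python
import math


def _normalize(vec):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def caption_probabilities(image_emb, text_embs, logit_scale=100.0):
    """Softmax over scaled cosine similarities between one image and N captions."""
    v = _normalize(image_emb)
    logits = [
        logit_scale * sum(a * b for a, b in zip(v, _normalize(t)))
        for t in text_embs
    ]
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy embeddings: the first caption is better aligned with the image.
probs = caption_probabilities([1.0, 0.2], [[0.9, 0.3], [0.8, 0.6]])
print(probs[0] > probs[1])  # True
```

With only two captions, CLIP “prefers” the correct caption whenever its probability exceeds 50%, which is the accuracy criterion used in the results that follow.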

Result

CLIP preferred the correct caption for 458 out of 502 images—a 91.23% accuracy.

Here is an example failure:

| | Caption | CLIP Assigned Probability |
|---|---|---|
| Correct | “an oven and a person and a microwave” | 27.20% |
| Incorrect | “an oven and a person and a snowboard” | 72.80% |

This experiment suggests that while CLIP demonstrates a strong ability to identify correct multi-object captions (achieving 91.23% accuracy), its performance can be notably affected by inaccuracies related to less prominent objects. Instances where CLIP preferred an incorrect caption (where only the third most prominent object was altered) suggest that the model may not consistently ground or verify all listed objects with equal rigor. This implies that CLIP’s scoring mechanism may assign greater weight to dominant objects or that its compositional understanding is less robust for objects lower in the visual hierarchy.

2. Reordered Correct vs. Incorrect Captions

Building on the previous experiment, we studied whether CLIP’s caption scoring accuracy would further decrease if the correct caption listed objects from smallest to largest prominence.

For each image, we still constructed two captions:

- A correct caption that mentions the top 3 most prominent objects in ascending order of prominence (smallest first).

- An incorrect caption: The incorrect caption (with the randomly swapped third object) from the previous experiment was reused.

Still using the aforementioned image 150410 for example, the two captions were:

- Correct: “a person and a truck and an airplane” (objects are mentioned in reverse order of prominence compared to the previous experiment’s correct caption)

- Incorrect: “an airplane and a truck and a chair”

Result

CLIP preferred the correct caption for 439 out of 502 images—an accuracy of 87.45%, lower than the previous experiment.

Although the number of images for which CLIP preferred the correct caption dropped by a net 19 (from 458 in the first experiment to 439 in this one), the change in performance is more intricate than a simple drop:

- 5 images whose incorrect captions were preferred by CLIP in the previous experiment: CLIP now preferred the correct caption.

- 24 images whose correct captions were preferred by CLIP in the previous experiment: CLIP now preferred the incorrect caption.
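
The two counts above reconcile with the net change: 458 + 5 − 24 = 439. A small sketch of how such flips can be tallied from per-image outcomes follows; the boolean lists are made-up placeholders, not our actual results.

```python
def count_flips(before, after):
    """Count images that flipped between two experiments.

    `before` and `after` are per-image booleans (True = CLIP preferred the
    correct caption), aligned by image. Returns (gained, lost).
    """
    gained = sum(1 for b, a in zip(before, after) if not b and a)
    lost = sum(1 for b, a in zip(before, after) if b and not a)
    return gained, lost


# Placeholder outcomes for four images.
print(count_flips([True, False, True, True], [False, True, True, False]))  # (1, 2)
```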

Image 273083 exemplifies one of these 24 cases where performance worsened:

| | Caption | CLIP Assigned Probability |
|---|---|---|
| Previous experiment (Largest First Correct) | | |
| Correct | “a pizza and a dog and a dining table” | 53.12% |
| Incorrect | “a pizza and a dog and a cell phone” | 46.88% |
| This experiment (Smallest First Correct) | | |
| Correct | “a dining table and a dog and a pizza” | 23.66% |
| Incorrect | “a pizza and a dog and a cell phone” | 76.37% |

This experiment reveals that the order in which objects are mentioned in a caption significantly impacts CLIP’s scoring, especially when less prominent objects are listed first. The accuracy decrease from 91.23% to 87.45% suggests CLIP is less confident or accurate when a correct caption’s textual sequence inverts the visual prominence hierarchy (i.e., smallest object mentioned first).

The misclassification of 24 images (previously scored correctly) when the correct caption was reordered indicates that CLIP might rely on an alignment between early-mentioned objects in the text and the most visually dominant objects in the image. When this alignment is disrupted (as in “smallest-first” correct captions), even if all objects are factually present, CLIP is more likely to prefer an incorrect caption that begins by mentioning the most prominent objects, even if it contains a subsequent error. This highlights a potential vulnerability: CLIP’s image-text alignment may be disproportionately influenced by a caption’s initial elements, potentially overshadowing a complete assessment of all described objects, especially when textual order mismatches visual salience.

Mitigation: Caption Permutation Ensemble

To reduce CLIP’s sensitivity to the order in which objects are mentioned, we propose ensembling the text embeddings of all permutations of the 3 objects featured in a caption rather than relying on a single caption ordering. For an image \(I\) with top-3 objects \(\{o_1, o_2, o_3\}\), the process is:

- Form the set of all \(M = 3! = 6\) permutations \(P = \{\pi_1,\ldots,\pi_M\}\), where each \(\pi_m\) is a tuple of the three objects.

- For each permutation \(\pi_m\), generate a caption \(c_m\) in the same way as in the previous experiments. Compute its text embedding and normalize: \(\begin{aligned} t_m \;=\;\mathrm{CLIP}_{\mathrm{text}}(c_m),\quad \tilde t_m \;=\;\frac{t_m}{\|t_m\|_2}. \end{aligned}\)

- Average these \(M\) normalized embeddings to produce an order-invariant text representation: \(\begin{aligned} \bar t \;=\;\frac{1}{M}\sum_{m=1}^{M}\tilde t_m, \qquad \hat t \;=\;\frac{\bar t}{\|\bar t\|_2}. \end{aligned}\)

- Encode and normalize the image once: \(\begin{aligned} v \;=\;\mathrm{CLIP}_{\mathrm{img}}(I),\quad \hat v \;=\;\frac{v}{\|v\|_2}. \end{aligned}\)

- Compute the final similarity score as the cosine similarity between \(\hat v\) and \(\hat t\): \(\begin{aligned} s_{\mathrm{ens}} \;=\;\langle \hat v,\;\hat t\rangle. \end{aligned}\)
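
The steps above can be sketched in plain Python as follows. This is an illustrative sketch, not our experiment code: `encode_text` is a hypothetical callable standing in for CLIP's text encoder, and `toy_encoder` is a deliberately order-sensitive stand-in that exists only so the example runs without model weights.

```python
import itertools
import math


def l2_normalize(vec):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def make_caption(objects):
    """Same "a X and a Y and a Z" template as in the experiments."""
    return " and ".join(("an " if o[0].lower() in "aeiou" else "a ") + o for o in objects)


def ensemble_text_embedding(objects, encode_text):
    """Average the normalized embeddings of all orderings, then renormalize."""
    embs = [
        l2_normalize(encode_text(make_caption(perm)))
        for perm in itertools.permutations(objects)
    ]
    mean = [sum(column) / len(embs) for column in zip(*embs)]
    return l2_normalize(mean)


def ensemble_score(image_emb, objects, encode_text):
    """Cosine similarity between the image and the ensembled text embedding."""
    v = l2_normalize(image_emb)
    t = ensemble_text_embedding(objects, encode_text)
    return sum(a * b for a, b in zip(v, t))


def toy_encoder(caption, dim=8):
    """Hypothetical stand-in encoder: earlier words get larger weights."""
    vec = [0.0] * dim
    for position, word in enumerate(caption.split()):
        vec[sum(map(ord, word)) % dim] += 1.0 / (position + 1)
    return vec


a = ensemble_text_embedding(["pizza", "dog", "dining table"], toy_encoder)
b = ensemble_text_embedding(["dining table", "dog", "pizza"], toy_encoder)
print(all(abs(x - y) < 1e-9 for x, y in zip(a, b)))  # True
```

The final check shows the property the ensemble is meant to provide: the representation depends only on which objects are listed, not on the order in which they are given. Note that the ensemble needs six text-encoder calls per object set instead of one.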

We want to underscore that the permutation ensemble is applied to both the correct set of objects and the incorrect set of objects. This keeps the method general: in the real world, we do not know in advance whether every object in a provided list actually appears in a given image.

By comparing the ensemble similarity score for the correct set of objects to the similarly computed ensemble score for the incorrect set of objects (where the third object is consistently swapped across its permutations, as in previous experiments), we expect this ensemble approach to smooth out ordering noise and yield a more robust decision boundary.

Result

| Experiment | Accuracy |
|---|---|
| Experiment 1 (without mitigation) | 458 out of 502 (91.23%) |
| With mitigation | 452 out of 502 (90.04%) |
| Experiment 2 (without mitigation) | 439 out of 502 (87.45%) |

The permutation ensemble mitigation yields 90.04% accuracy (452/502), which sits between our original largest-first baseline (91.23%) and the reversed-order worst-case (87.45%). Although ensembling all six correct-caption permutations does not quite match the peak performance of the best single ordering, it substantially mitigates the drop seen with adverse orderings, recovering 2.59 percentage points (from 87.45% to 90.04%) compared to the smallest-first scenario. This demonstrates that averaging over permutations effectively smooths CLIP’s sensitivity to object mention order, trading a small amount of peak accuracy for markedly improved robustness against ordering noise.

Conclusion

Our investigation into CLIP’s handling of multi-object scenes using real-world COCO images confirms that the model, while generally proficient, exhibits clear sensitivities to both the accuracy of object mentions and their textual order. We found that:

- CLIP is vulnerable to inaccuracies concerning less prominent objects. While achieving a high accuracy (91.23%) when the most prominent objects were correctly listed first, errors in identifying the third object could still mislead the model, suggesting a potential hierarchy in how CLIP grounds objects.

- Object mention order significantly impacts CLIP’s judgment. Reversing the caption order to list the least prominent of the three objects first (Experiment 2) led to a notable drop in accuracy to 87.45%. This demonstrates an “order bias,” where CLIP appears to favor captions that align with a “largest-first” heuristic, even if an alternative ordering is equally correct.

- Permutation ensembling offers a viable mitigation strategy. By averaging the embeddings of all possible permutations of each candidate object set (correct and incorrect alike), we achieved an accuracy of 90.04%. This approach smoothed out the negative impact of unfavorable orderings, recovering 2.59 percentage points from the worst-case scenario (87.45%) and providing a more robust, order-agnostic representation. While this came at the cost of a slight dip from the optimal single-order accuracy, the gain in robustness is significant.

These findings underscore the importance of considering object prominence and mention order when evaluating or deploying vision-language models like CLIP in complex, multi-object environments. While CLIP’s zero-shot capabilities are powerful, its internal biases can lead to performance variations that might be critical in real-world applications. The permutation ensemble method offers a practical step towards more reliable multi-object caption scoring, trading a small amount of peak performance for improved consistency.

Future work could explore a much bigger dataset size and more sophisticated ensembling techniques, investigate the architectural underpinnings of these biases within CLIP, or develop training strategies that inherently reduce such sensitivities, paving the way for even more robust and equitable vision-language understanding.

Acknowledgement

We want to credit and thank Professor Phillip Isola for suggesting the permutation ensembling post-hoc approach during an insightful discussion about the project. It was truly an enlightening moment!

Special thanks to the Spring 2025 6.8300 teaching team:

- Professor Vincent Sitzmann for redesigning the course to be more relevant and for consistently bringing amazing energy to each lecture.

- Our wonderful TAs, Vivek Gopalakrishnan, Isabella Yu, and Adriano Hernandez, for providing invaluable feedback on this project and being open to engaging conversations about academic life.